Ran Cheng

Associate Professor

I am currently an Associate Professor (Presidential Young Scholar) with the Department of Data Science & Artificial Intelligence and the Department of Computing at The Hong Kong Polytechnic University (PolyU). Previously, I was an Associate Professor with the Department of Computer Science and Engineering at Southern University of Science and Technology (SUSTech). I received my BSc degree from Northeastern University (China) in 2010 and my PhD degree from the University of Surrey (UK) in 2016. Between 2010 and 2012, I spent a cherished period at Zhejiang University as a postgraduate student.

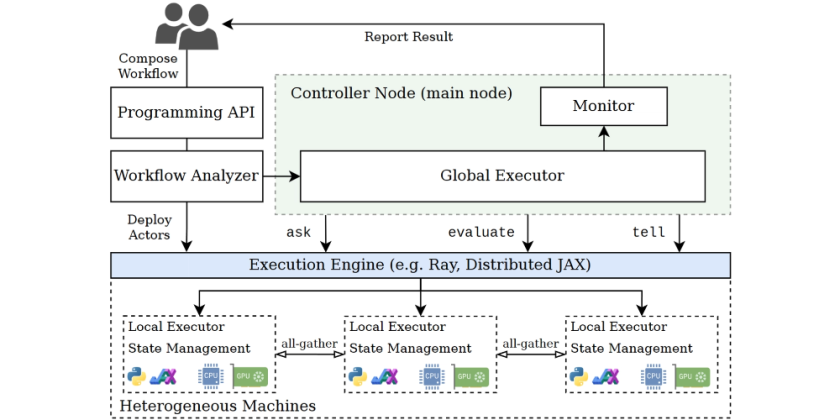

My research philosophy is centered on benefiting humanity by bridging nature and technology. Within the broad domain of Artificial Intelligence (AI), my research focuses on enhancing the evolvability of AI systems, with the goal of developing computational paradigms capable of autonomous learning and continual evolution. My primary research interests include Evolutionary Computation (EC), Representation Learning, and Reinforcement Learning, with an emphasis on developing efficient and scalable algorithms that leverage modern hardware acceleration. As a representative effort, I initiated the EvoX project, which aims to bridge traditional EC methodologies with advanced GPU computing infrastructures.

I am the founding chair of the IEEE Computational Intelligence Society (CIS) Shenzhen Chapter. I serve as an Associate Editor/Editorial Board Member for several journals, including ACM Transactions on Evolutionary Learning and Optimization, IEEE Transactions on Evolutionary Computation, IEEE Transactions on Emerging Topics in Computational Intelligence, IEEE Transactions on Cognitive and Developmental Systems, and IEEE Transactions on Artificial Intelligence.

I am the recipient of the IEEE Transactions on Evolutionary Computation Outstanding Paper Awards (2018, 2021), the IEEE CIS Outstanding PhD Dissertation Award (2019), the IEEE Computational Intelligence Magazine Outstanding Paper Award (2020), and the IEEE CIS Outstanding Early Career Award (2025). I have been featured among the World’s Top 2% Scientists (2020–2024) and the Clarivate Highly Cited Researchers (2023–2025). I am a Senior Member of IEEE.

news

| Date | News |
|---|---|
| Nov 12, 2025 | I am Named a 2025 Clarivate Highly Cited Researcher |
| Sep 20, 2025 | Our Paper is Accepted by NeurIPS 2025 as Spotlight |
| Aug 07, 2025 | Our EvoGit Wins the First Place of AgentX Competition |
| Dec 16, 2024 | I Joined the Hong Kong Polytechnic University |
| Jun 01, 2024 | I Received the IEEE CIS Outstanding Early Career Award |